The question of GEO KPIs usually surfaces right after a very familiar moment: you’ve decided to invest in GEO, aligned stakeholders, and started adapting content for generative search. Then someone asks the follow-up question: how are we actually going to measure this?

Visibility inside generative answers, citations in AI summaries, and brand inclusion without clicks all feel useful. However, without clear benchmarks, they’re hard to defend. This is why measuring GEO KPI performance becomes the real test of any GEO strategy.

We also know that the way people discover information has already shifted. According to research cited in How to Optimize Content for GEO and AEO in an AI-Native World, when a Google AI Overview appears, users click an organic result only 8% of the time, and 77% of ChatGPT users now rely on it for search, with nearly a third trusting it more than traditional search engines. So visibility is happening, but not in the places our legacy dashboards were built to track.

Another thing we all know is that generative search doesn’t reward rankings in isolation. It rewards authority, structure, and credibility at the moment an answer is generated. As the Jasper ebook we mentioned puts it:

“That coveted #1 Google ranking is no longer enough by itself to reach your full audience.”

The scale of this shift is hard to ignore. Gartner forecasts that by 2028, brands could see a 50% or greater decline in organic search traffic because of AI-generated answers, while zero-click searches already account for around 60% of searches in the US and Europe.

So generative search metrics help teams understand whether their brand is being cited, referenced, and trusted by AI systems. As noted above, without this lens, organizations risk investing in GEO while still judging performance through SEO metrics designed for a pre-AI search experience.

As Loreal Lynch puts it:

Search is the same game it’s always been; now, you just need a different playbook.

GEO KPIs are that playbook’s scoreboard.


Why Measuring GEO Is Fundamentally Different From SEO

The moment we try to measure GEO using SEO logic, the cracks start to show.

The research paper “Generative Engine Optimization (GEO): The Mechanics, Strategy, and Economic Impact of the Post-Search Era” makes this distinction explicit:

SEO is built for ranked retrieval systems, while GEO operates inside probabilistic, generative systems.

As you already know, in traditional SEO, performance measurement assumes a stable output. Pages are indexed, ranked, and presented as links. Metrics like impressions, clicks, CTR, and average position work because the system is largely deterministic.

As the paper explains, SEO measurement is tied to retrieval visibility: whether a document is returned and then chosen by a user from a list.

GEO, by contrast, operates under a different mechanical model.

Generative engines don’t just retrieve and display documents; they select and synthesize information to construct answers. So visibility in generative systems is not positional. A brand’s content may influence the response without being surfaced as a link or even explicitly cited, which immediately breaks click-based attribution models.

This leads to a second fundamental difference: measurement shifts from outcomes to contribution. SEO metrics capture outcomes (such as traffic, conversions, and rankings); GEO metrics must capture contribution:

- How often a source is incorporated into generated answers,

- How consistently a brand is referenced across prompts,

- Whether its framing persists across different query formulations.

What’s more, generative outputs are non-deterministic by design. That means the same query can produce different answers depending on prompt phrasing, context windows, or model updates.

As a result, GEO KPIs cannot rely on single-query snapshots. They must track patterns of inclusion, frequency of citation, and persistence over time, something traditional SEO tooling was never designed to do.
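To make "patterns, not snapshots" concrete, here is a minimal sketch that re-runs each prompt several times and reports the share of sampled answers that mention a brand. It assumes you already collect answers as `(prompt, answer_text)` pairs. The function name, the data format and the brand names ("Acme", "Globex") are hypothetical. Matching is a naive substring check, not a real entity matcher.

```python
from collections import defaultdict


def inclusion_rate(runs, brand):
    """Per-prompt share of sampled answers that mention `brand`.

    `runs` is a list of (prompt, answer_text) pairs. The same prompt appears
    several times because it was re-run to sample the model's variability.
    Matching is a naive case-insensitive substring check.
    """
    seen = defaultdict(int)   # how many times each prompt was sampled
    hits = defaultdict(int)   # how many of those answers mentioned the brand
    needle = brand.lower()
    for prompt, answer in runs:
        seen[prompt] += 1
        if needle in answer.lower():
            hits[prompt] += 1
    return {prompt: hits[prompt] / seen[prompt] for prompt in seen}


# Hypothetical sampled answers for two prompts, each run more than once.
runs = [
    ("what is geo", "GEO is ... Acme describes it as ..."),
    ("what is geo", "Generative engine optimization is ..."),
    ("what is geo", "According to Acme, GEO ..."),
    ("best geo tools", "Popular options include Acme and Globex."),
    ("best geo tools", "Globex is often recommended."),
]
print(inclusion_rate(runs, "Acme"))  # "what is geo": 2 of 3 runs, "best geo tools": 1 of 2
```

A single run of "best geo tools" would have reported either 0% or 100% inclusion. Sampling several runs gives a rate that can be compared over time.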

Finally, generative systems often collapse the buyer journey by answering questions directly, which reduces the role of clicks altogether. Influence happens upstream, before any visit occurs. This is why GEO KPIs prioritize brand presence inside answers.

| Measurement Dimension | SEO | GEO |
|---|---|---|
| System logic | Ranked, document-retrieval systems | Probabilistic, generative answer systems |
| What visibility means | Appearing as a clickable link | Being used or referenced in AI-generated answers |
| Primary success signals | Rankings, impressions, clicks | Mentions, citations, inclusion, consistency |
| Role of traffic | Traffic is the core performance proxy | Influence can exist without clicks |
| Stability over time | Relatively stable and trackable | Varies by prompt, context, and model behavior |
| Measurement focus | Outcome-based metrics | Contribution- and influence-based metrics |
| Attribution model | Direct attribution from user actions | Indirect attribution through knowledge shaping |
| Core question answered | “Did the user click us?” | “Did the model trust and use us?” |

Why Rankings and Traffic Fail as GEO Metrics

For marketers, rankings and traffic have been our safety net for years. They’re familiar, easy to explain, and embedded in how we report performance. But in a generative search environment, they don’t tell the full story.

The first thing that breaks is rankings. Generative systems don’t work with fixed positions. There is no page one to win or stable slot to defend. Answers are assembled in real time, shaped by context, phrasing, and intent. Ask the same question twice, and you may not get the same sources, or any sources at all. That’s why rankings fail as generative search metrics.

Traffic, on the other hand, fails more quietly, and that’s what makes it dangerous. Generative search often resolves the user’s need on the spot. The answer is right there. Decisions are made without a click ever happening.

Instead of asking how many people landed on your site, as traffic does, GEO performance measurement asks different questions:

- Are we showing up inside answers?

- Are we being referenced consistently when this topic comes up?

- Is the way AI explains this space aligned with how we want to be understood?

These are generative search metrics rooted in presence and influence.

As we mentioned earlier, this isn’t about throwing SEO metrics away. Rankings and traffic still matter for traditional discovery paths. But when we rely on them to judge GEO, they systematically understate impact.

GEO performance measurement only works when we accept that some of the most valuable interactions now happen before the click. That shift also changes how teams think about investment, expectations, and even GEO service pricing. When influence replaces traffic as the primary signal, value needs to be measured differently too.

Let’s be more specific:

Visibility vs Presence in AI-Generated Answers

To keep building on the same idea, here is a useful way to make GEO feel more practical and less abstract. Think of visibility as what you see, and presence as what the AI actually relies on.

- Visibility = your brand appears.
  - Your name shows up in an AI answer.
  - Your page is cited once.
  - You can screenshot it and share it internally.
- Presence = your content is doing the work.
  - The AI uses your explanation, definition, or data.
  - Your framing shows up even when your name doesn’t.
  - The answer “sounds like” the way you talk about the topic.
- Visibility is one-off. Presence repeats.
  - You appear for one prompt → visibility.
  - You appear again for similar questions → presence.
- Visibility is easy to spot:
  - Mentions
  - Citations
  - Links
- Presence takes more intention to track.

What Counts as a GEO KPI (And What Doesn’t)

Once teams accept that GEO needs different measurement logic, the next question is usually very practical: what do we actually track? And what should we stop pretending matters?

Here’s a set of questions to separate what counts as a GEO KPI from what doesn’t:

- Inclusion in AI-generated answers: Are you showing up inside answers for relevant prompts?

- Citation or source usage frequency: When sources are shown, how often is your content chosen compared with competitors?

- Brand mention consistency: Does your brand appear reliably when your category or problem space is discussed?

- Prompt-level presence: Are you included across variations of the same question (how-to, comparison, definition, follow-up)?

- Narrative alignment: Does the AI explain the topic in a way that matches your positioning, language, or viewpoint?

- Share of generative voice: Among all brands referenced in AI answers for a topic, how often are you included?

These are the signals that support real GEO performance measurement, because they capture contribution, not just exposure.
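The article doesn't give a formula for share of generative voice. One common way to operationalize it is each brand's share of all brand mentions across a set of AI answers for a topic. The sketch below makes that assumption and counts a brand at most once per answer. The function name, brand names and answer strings are hypothetical. In practice the answers would come from whatever prompt-tracking process you use.

```python
from collections import Counter


def share_of_generative_voice(answers, brands):
    """Each brand's share of all brand mentions across a set of AI answers.

    A brand is counted at most once per answer, so one long answer that
    repeats a name does not dominate. Matching is naive substring search.
    """
    counts = Counter()
    for answer in answers:
        text = answer.lower()
        for brand in brands:
            if brand.lower() in text:
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


answers = [  # hypothetical answers collected for one topic
    "Acme and Globex are the usual picks.",
    "Globex is the most popular option.",
    "Consider Acme, Globex, or Initech.",
]
print(share_of_generative_voice(answers, ["Acme", "Globex", "Initech"]))
# Acme 2 of 6 mentions, Globex 3 of 6, Initech 1 of 6
```

Counting once per answer is a deliberate choice. The metric then reflects how often a brand is included, not how verbose a single answer was.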

And what doesn’t count as a GEO KPI (by itself)?

- Organic rankings: There is no stable ranking system inside generative answers. Tracking positions creates false confidence.

- Sessions and pageviews: Generative search often resolves intent with no click.

- CTR from traditional SERPs: CTR assumes a list of options. Generative answers remove that choice altogether.

- Keyword-level traffic trends: Prompts replace keywords. One prompt can pull from dozens of concepts, making keyword-only tracking incomplete.

- Single screenshots of AI answers: A moment in time is not performance. GEO is about patterns, not proof-of-life examples.

If a metric only tells you what happened after the click, it’s probably not a GEO KPI. If it helps you understand whether and how the model used you, it probably is.

Qualitative GEO Signals That Matter More Than Numbers

If we keep following the same line of thinking, this is where GEO really starts to feel different from anything we’ve measured before.

In fact, some of the strongest signals of AI search visibility are things you notice only when you slow down and look at the answers themselves. What are they?

- How your brand is explained: Generative systems prioritize semantic clarity and coherence, so when descriptions consistently match how you want to be understood, that’s a strong indicator of real visibility, even if your AI search visibility metrics don’t spike immediately.

- Whether your ideas show up without your name: Generative systems often reuse trusted explanations without attribution. When your frameworks, definitions, or reasoning appear in answers, that’s influence.

- Consistency across similar questions: Repetition across prompt variations is a stronger signal than one-off exposure. This kind of pattern is at the heart of meaningful generative search tracking.

- Depth of inclusion within the answer: There’s a big difference between being listed and being relied on. When AI uses your content to explain how something works or why it matters, that signals deeper trust.

- Confidence of the model’s language: Generative systems hedge when confidence is low. When your brand is referenced with certainty, it reflects stronger internal trust. The research links this qualitative signal directly to perceived authority.

- Presence in follow-up answers: When your brand continues to appear as questions get more specific, that’s a sign of sustained relevance, something raw AI search visibility metrics rarely capture on their own.

Before closing this section, let’s remember: in GEO, those “how” and “why” signals often matter more than volume, because they reveal whether generative systems trust your content enough to keep using it. That’s the foundation of durable visibility in AI-generated answers and the real goal of measuring AI search visibility in a generative-first world.

Now it’s time to focus on two key qualitative GEO signals: brand positioning and comparative mentions.

Brand Positioning Inside AI Responses

Here is a stat pointing to a shift that’s already starting to surface in how brands think about GEO:

By 2027, Gartner expects 20% of brands to actively position themselves around the absence of AI in their business or products. It’s a response to trust. When 72% of consumers say they believe AI-generated content can spread false or misleading information, and confidence in AI outperforming humans is eroding, brands are reacting to perception as much as capability.

On brand positioning and AI, Emily Weiss, Senior Principal Researcher at Gartner, says:

Mistrust and uncertainty in AI’s abilities will drive some consumers to seek out AI-free brands and interactions. A subset of brands will shun AI and prioritize more human positioning. This ‘acoustic’ concept will be leveraged to distance brands from perceptions of AI-powered businesses as impersonal and homogeneous.

What this means for GEO is simple but important: brand positioning inside AI-generated answers is about what you stand for when you’re included.

Gartner’s idea of “acoustic” branding is about differentiation in a world where AI-driven experiences are beginning to feel interchangeable.

Here’s how that plays out inside AI responses:

- AI encodes perception.

- Absence can become a positioning signal.

- Generative systems reflect dominant narratives.

- Homogeneity is the real risk.

- Trust is becoming a differentiator.

So, inside AI-generated answers, positioning is about trust, tone, and intent. In a market where confidence in AI is fragile, those signals can matter more than “raw exposure.”

Comparative Mentions vs Competitors

Are we showing up more (or less) than our competitors inside AI-generated answers?

This is where comparative mentions become a critical lens for understanding real AI search visibility.

According to the Stan Ventures breakdown of SEO KPIs for AI search, traditional metrics fall short because AI-driven answers often reference multiple brands at once, framing them side by side within a single response rather than forcing a winner-takes-all ranking model. In this environment, visibility is relative, and it is all about who appears with you.

Here’s how to think about comparative mentions in a GEO context:

According to the same research, generative systems frequently list, compare, or recommend multiple brands in a single response. These side-by-side mentions replace “traditional” rankings, which makes comparative presence a more meaningful KPI than position alone.

When competitors are mentioned alongside you, pay attention to how the AI differentiates them.

RevenueZen’s article on measuring AI search visibility highlights how much mentions matter:

This is your new “share of voice” in the AI world. If ChatGPT, Gemini, or Perplexity keeps mentioning your brand when users ask about your category, you’re already winning. No click required. Just consistent visibility.

On the other hand, one-off comparisons don’t mean much. That’s why brands should track how often they’re mentioned relative to competitors across multiple AI-generated responses.

If competitors appear in AI answers where your brand is missing, that gap is more actionable than a drop in rankings. Absence in AI responses often indicates weaker semantic relevance or trust signals.
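The gap check described above can be sketched in a few lines. Given one collected answer per tracked prompt, it lists prompts where at least one competitor appears and your brand does not, and it records which competitors filled the gap. The function name, input format and brand names are hypothetical, and the substring matching is deliberately naive.

```python
def absence_gaps(answers_by_prompt, brand, competitors):
    """Prompts where at least one competitor is mentioned but `brand` is not.

    Returns {prompt: [competitors present]} so each gap shows who filled it.
    """
    gaps = {}
    for prompt, answer in answers_by_prompt.items():
        text = answer.lower()
        if brand.lower() in text:
            continue  # we are present; not a gap
        present = [c for c in competitors if c.lower() in text]
        if present:
            gaps[prompt] = present
    return gaps


answers_by_prompt = {  # hypothetical answers, one per tracked prompt
    "best crm for startups": "Globex and Initech are common picks.",
    "cheapest crm": "Acme is cheaper than Globex.",
    "what is a crm": "A CRM stores customer data.",
}
print(absence_gaps(answers_by_prompt, "Acme", ["Globex", "Initech"]))
# {'best crm for startups': ['Globex', 'Initech']}
```

"what is a crm" is not flagged even though Acme is missing, because no competitor appears there either. That is an unclaimed topic, not a competitive gap.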

In short, comparative mentions are the generative-era equivalent of competitive rankings. They show whether you’re competitive inside AI-generated narratives. In GEO, winning means being the brand AI chooses to include, explain, and compare favorably when alternatives are presented.

How to Track GEO Performance in Practice

First of all, GEO performance isn’t tracked with a single metric or tool. It’s tracked through a mix of quantitative signals and structured observation.

As we said before, unlike SEO, GEO performance doesn’t live in one dashboard by default. Generative answers are dynamic, prompt-driven, and often opaque. The goal is to understand where, how, and how often your brand is used by AI systems when answers are generated.

“12 new KPIs for the generative AI search era” provides a clear, layered way to track GEO performance. The author of the piece (Duane Forrester) is clear about one thing: “These are simply my ideas. A starting point. Agree or don’t. Use them or don’t.”

That said, we believe these are the right GEO KPIs to start with. Not because they’re perfect, but because they reflect how generative systems actually work today.

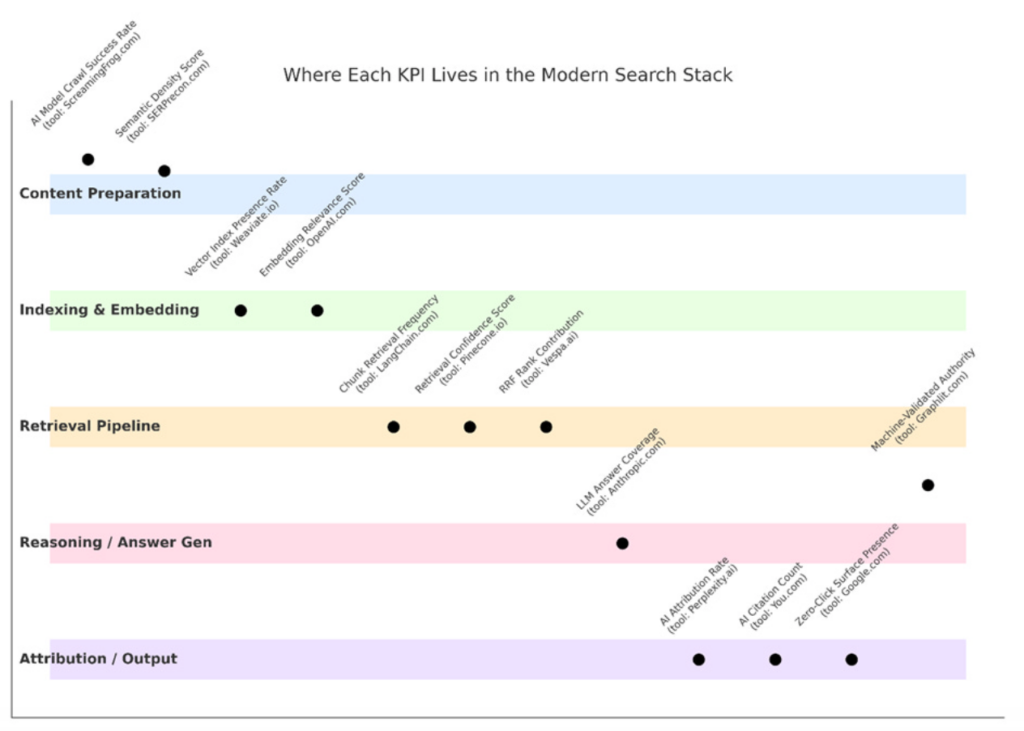

Key ways to track GEO performance in practice:

- Chunk retrieval frequency: How often your content blocks are retrieved in response to prompts.

- Embedding relevance score: How closely your content matches the intent of a query at the vector level.

- Attribution rate in AI outputs: How often your brand or site is cited in AI-generated answers.

- AI citation count: Total number of times your content is referenced across LLM responses.

- Vector index presence rate: The percentage of your content successfully indexed in vector databases.

- Retrieval confidence score: How confidently the model selects your content during retrieval.

- RRF rank contribution: The influence your content has on the final answer ranking after re-ranking.

- LLM answer coverage: The number of distinct prompts your content helps answer.

- AI model crawl success rate: How much of your site AI crawlers can access and ingest.

- Semantic density score: How information-rich and conceptually connected each content block is.

- Zero-click surface presence: How often you appear in AI answers without generating a click.

- Machine-validated authority: Your perceived authority as evaluated by AI systems, not links.
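Two of these KPIs name concrete retrieval mechanics. The list doesn't spell out formulas, so this sketch makes two assumptions. First, "embedding relevance score" is the cosine similarity between a query embedding and a content-chunk embedding. Second, "RRF" is Reciprocal Rank Fusion, which scores each item as the sum of 1 / (k + rank) across several rankings, with the conventional k = 60. You generally can't observe a production AI engine's internal rankings. These functions only illustrate the mechanics you might reproduce in your own retrieval test setup. The vectors and page ids are toy values.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of chunk ids: score = sum of 1 / (k + rank).

    Ranks are 1-based; k=60 is the constant commonly used for RRF.
    Returns {chunk_id: score}, highest score first.
    """
    scores = {}
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] = scores.get(chunk_id, 0.0) + 1.0 / (k + rank)
    return dict(sorted(scores.items(), key=lambda item: item[1], reverse=True))


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
query_vec = [0.9, 0.1, 0.0]
chunk_vec = [0.8, 0.2, 0.1]
print(round(cosine_similarity(query_vec, chunk_vec), 3))

# Hypothetical keyword and vector rankings for the same query.
keyword_ranking = ["competitor-page", "our-guide", "forum-post"]
vector_ranking = ["our-guide", "forum-post", "competitor-page"]
fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(list(fused))  # ['our-guide', 'competitor-page', 'forum-post']
```

In this toy example, "our-guide" wins the fused ranking without being first in the keyword list. Consistently ranking well across retrievers is what RRF rewards.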

At this point, it’s worth reviewing a GEO vs SEO KPI comparison. Ken Marshall’s article “Use These KPIs To Measure Success With Your Generative Engine Optimization (GEO) Campaign” gives us a clear picture:

Source: Use These KPIs To Measure Success With Your Generative Engine Optimization (GEO) Campaign

So far, we have explored how to track GEO performance and key GEO KPIs, with a comparison to traditional search KPIs. The next topic is the common mistakes brands and marketers make when measuring generative search.

Common Mistakes Teams Make When Measuring Generative Search

Let’s repeat: generative search looks familiar on the surface, but it behaves differently underneath. Most mistakes come from measuring outcomes instead of influence.

Here are the most common pitfalls when measuring generative search:

- Treating AI visibility like rankings with a new name: AI systems don’t rank pages; they assemble answers. Trying to track positions or their equivalents leads teams to chase signals that don’t actually exist.

- Relying on traffic to validate success: When teams judge GEO purely by sessions or conversions, they miss the value created inside the AI response itself.

- Over-celebrating single AI mentions: Repeat inclusion across prompts is what matters. One-off visibility doesn’t equal durable presence.

- Ignoring who you appear next to: AI answers often mention multiple brands together. As we mentioned before, comparative visibility is a key GEO signal. Teams that track “did we appear?” but ignore who else appeared miss how AI is actually positioning them in the market.

- Focusing only on citations: Attribution is useful, but it’s incomplete. AI systems often paraphrase or reuse content without citing it.

- Tracking too narrow a set of prompts: Generative visibility changes with phrasing. Measuring performance on a handful of prompts creates a distorted picture. We recommend broader prompt coverage to understand consistency and resilience.

- Expecting stability too quickly: AI outputs change. Models update. Answers fluctuate. GEO measurement is about trends over time, not day-to-day precision. Teams that expect SEO-style stability often give up before patterns emerge.
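One simple way to look at trends rather than day-to-day noise is a trailing moving average over periodic measurements, such as a weekly inclusion rate. The sketch below assumes you already have one value per period. The weekly numbers are invented, and the window size is a judgment call that depends on how often you sample.

```python
def rolling_mean(values, window=4):
    """Trailing moving average; early points average whatever history exists."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(values)):
        recent = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(recent) / len(recent))
    return smoothed


# Hypothetical weekly inclusion rates: noisy week to week.
weekly = [0.20, 0.35, 0.15, 0.30, 0.40, 0.25]
for week, (raw, trend) in enumerate(zip(weekly, rolling_mean(weekly, window=3)), start=1):
    print(f"week {week}: raw {raw:.2f}  trend {trend:.2f}")
```

The raw series swings by 15 to 20 points between weeks. The smoothed series moves much less, which makes a sustained shift easier to tell apart from model variability.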

The common thread here is expectation. As any successful GEO agency knows, effective GEO measurement comes from accepting that presence, consistency, and influence matter more than clicks and rankings.

We also want to highlight something that’s easy to miss in GEO conversations: GEO metrics don’t live in one place; they live across the entire AI search stack. Brand visibility in AI-generated answers is not the result of a single system or signal. It’s the outcome of how your content moves through multiple technical layers, each with its own logic and success criteria.

Duane Forrester’s graphic on where each KPI lives in the modern search stack illustrates this well:

Source: 12 recent KPIs for the generative AI search era

GEO KPIs for Different Business Goals

Another mistake teams make with GEO is trying to apply the same KPIs to every objective. Generative search doesn’t work that way. The signals that matter for brand credibility are not the same ones that matter for pipeline creation or competitive pressure.

GEO KPIs only become useful when they’re anchored to a clear business goal.

Below, we break GEO measurement down by intent.

GEO Metrics for Brand Authority

Authority in generative search shows up through consistency and reuse.

The GEO KPIs that matter most here focus on how AI systems rely on your brand:

- AI answer inclusion rate: How often your brand appears in answers for category-level or educational prompts.

- Narrative alignment: Whether AI explanations reflect your language, framing, and viewpoint.

- Depth of inclusion: Are you used to explain why or how something works, not just listed as an option?

- Persistence across prompts: Does your brand keep showing up when similar questions are asked in different ways?

- Machine-validated authority: Whether AI systems treat your content as reliable enough to reuse repeatedly.
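Persistence across prompts can be checked by grouping several phrasings of the same underlying question and listing the phrasings where your brand drops out. This sketch assumes answers are already collected per variant. The intent labels, prompt variants, brand name and function name are all hypothetical, and matching is naive substring search.

```python
def persistence_report(variant_answers, brand):
    """For each intent, list the prompt variants whose answer omits `brand`.

    `variant_answers` maps intent -> {prompt_variant: answer_text}.
    An empty list means the brand persisted across every variant.
    """
    report = {}
    needle = brand.lower()
    for intent, answers in variant_answers.items():
        report[intent] = [
            variant for variant, answer in answers.items()
            if needle not in answer.lower()
        ]
    return report


variant_answers = {  # hypothetical phrasings of the same underlying question
    "define geo": {
        "what is geo": "Acme defines GEO as ...",
        "geo meaning": "GEO, as Acme puts it, ...",
        "explain generative engine optimization": "It is the practice of ...",
    },
    "measure geo": {
        "how to measure geo": "Acme suggests tracking inclusion rate ...",
        "geo kpis": "Acme lists share of voice ...",
    },
}
print(persistence_report(variant_answers, "Acme"))
# {'define geo': ['explain generative engine optimization'], 'measure geo': []}
```

Listing the missing variants, instead of returning only a percentage, points straight at the phrasing where your authority isn't carrying over.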

GEO Metrics for Demand Generation

When GEO is tied to demand generation, the goal shifts from being informative to being influential at the moment of choice.

At this stage, the most important question is simple: are we showing up when people are deciding what to buy? And if the answer is yes, how are we being positioned?

The GEO metrics that matter most for demand generation focus on commercial intent and competitive framing:

- Presence in high-intent prompts: Does your brand appear in prompts like:
  - best tools for…
  - top platforms for…
  - which solution should I use for…

- Comparative mention frequency: How often are you mentioned alongside direct competitors in AI-generated answers?

- Role within the recommendation: Are you positioned as:
  - The default choice
  - The premium option
  - The easiest to adopt
  - The alternative

- Zero-click influence: How often does your brand influence AI answers even when no click follows?

- Follow-up answer persistence: Does your brand continue to appear as questions move from general to specific?

For many teams, this is also where internal complexity shows up. That’s why some organizations conclude that they need a GEO agency to operationalize GEO in a way that connects generative visibility to real demand outcomes.

Done well, GEO for demand generation doesn’t replace performance marketing or SEO. It complements them by shaping decisions earlier.

GEO Metrics for Competitive Displacement

For teams focused on winning market share, GEO measurement becomes comparative by nature. The goal is to displace competitors in AI-generated narratives.

The most telling GEO KPIs here highlight relative visibility and role shifts:

- Share of AI mentions vs competitors: How often you’re included compared with direct alternatives.

- Absence analysis: Which prompts do competitors appear for where you don’t?

- Role substitution signals: In which cases do you start occupying roles competitors previously held in AI answers?

- Competitive framing changes: How AI contrasts you with others (for instance, moving from “alternative” to “leader”).

- RRF rank contribution in competitive prompts: How much does your content influence final answers when multiple brands compete?
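Displacement shows up as movement in relative share over time. A minimal way to quantify it is to compare each brand's share of mentions across the same competitive prompt set in two periods, reported in percentage points. This sketch assumes you already have mention counts per brand per period. The counts, brand names and function name are hypothetical.

```python
def share_shift(before, after):
    """Percentage-point change in each brand's share of AI mentions.

    `before` and `after` map brand -> mention count for two periods.
    """
    def shares(counts):
        total = sum(counts.values())
        return {brand: n / total for brand, n in counts.items()} if total else {}

    s0, s1 = shares(before), shares(after)
    return {
        brand: round(100 * (s1.get(brand, 0.0) - s0.get(brand, 0.0)), 1)
        for brand in sorted(set(s0) | set(s1))
    }


# Hypothetical mention counts across the same competitive prompt set.
q1 = {"Acme": 10, "Globex": 30, "Initech": 10}
q2 = {"Acme": 20, "Globex": 20, "Initech": 10}
print(share_shift(q1, q2))  # {'Acme': 20.0, 'Globex': -20.0, 'Initech': 0.0}
```

Using shares rather than raw counts keeps the comparison fair if the number of tracked prompts or sampled answers changes between periods.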

In displacement scenarios, progress often shows up quietly first.

So, yes, generative search is changing how brands get discovered, often in ways that aren’t immediately obvious.

GEO is starting to move out of the “interesting idea” phase and into everyday reality. In the USA, for example, GEO agencies are becoming part of the conversation. That is not only because some teams can’t do this in-house, but also because the landscape is new and constantly changing.

In the end, the brands that come out ahead will be the ones that stay curious, focus on the signals that actually matter, and keep adapting as search continues to evolve.
