ChatGPT is striking fear into the hearts of professionals across every industry, from education and publishing to healthcare and big tech. Apparently, the bot isn’t only going to steal your job, but also put search engines and an array of other technologies out of work.

Of course, few things in the technology industry are as overhyped as anything to do with artificial intelligence. Sensationalist headlines are easy to write, but is there any substance to the idea of ChatGPT replacing Google and other search engines?

What Is ChatGPT?

ChatGPT (Chat Generative Pre-trained Transformer) is an AI chatbot developed by OpenAI. More specifically, it’s a question answering (QA) model that uses natural language processing (NLP) to understand human questions and prompts, then generate answers based on the information in its training dataset.

Recently, ChatGPT has gained a lot of attention for its ability to produce clear, concise – and accurate – answers to questions of varying complexity.

The quality of its responses has prompted comparisons with search engines and suggestions that it does a better job than the likes of Google. Increasingly, people are asking whether ChatGPT, or future versions of the same technology, will replace search engines.

Could ChatGPT Replace Search Engines?

The speculation only mounts when you have people like Paul Buchheit – the man who created Gmail – saying AI bots like ChatGPT will “eliminate” search engines within a year or two.

However, ChatGPT isn’t a search engine and, more importantly, it isn’t designed to solve the same problems as one. That being said, it is designed to solve some of the problems search engines face and, arguably, it’s doing a better job than Google.

The first of these problems is natural language processing (NLP): using machine learning to interpret the meaning of text or speech. ChatGPT’s core function is to understand human prompts and provide human-like responses.

This is where ChatGPT excels, but we have to consider the context in which each technology is applied.

Google is trying to understand a query and return the best search results. It’s not formulating its own answers but trying to provide the best existing answers in order of relevance. ChatGPT, on the other hand, tries to understand the prompt and generate its own answer from existing information.

This distinction is important because it makes ChatGPT “better” at certain types of interactions and Google at others. Also, keep in mind that Google is designed to handle a much wider variety of interactions than ChatGPT – something we’ll explore in more detail later.

What Is ChatGPT Good At?

ChatGPT is setting a new benchmark for natural language processing (NLP) and, more specifically, the question answering (QA) discipline. This requires the system to understand the initial question, find relevant information within its training data and produce a suitable answer.

By all accounts, ChatGPT does a very good job of this – within certain limitations.

You can test ChatGPT for yourself by creating an OpenAI account (it’s free to sign up and test). The initial interface lists a set of example prompts along with its capabilities and limitations.

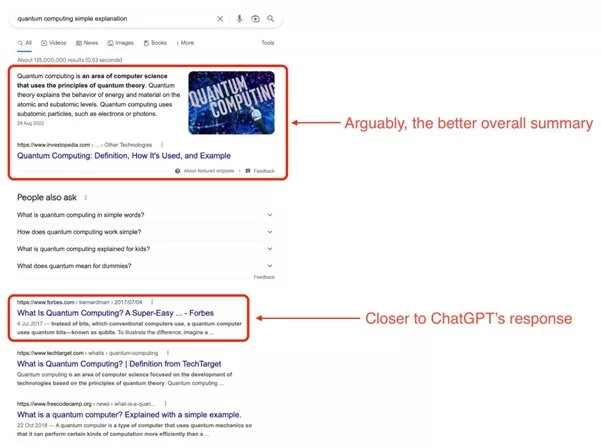

We’ll discuss the limitations in the next section but, first, let’s see how it handles the first example prompt: “Explain quantum computing in simple terms”.

Remember that ChatGPT has generated this answer from existing information. The result is an AI-generated response that answers the question, makes perfect grammatical sense and does a pretty good job of explaining one aspect of quantum computing (it doesn’t touch on quantum theory at all).

A quick Google search brings up broader definitions of quantum computing (referencing quantum theory, the purpose of studying energy and material at the atomic and subatomic level, etc.) as well as the more operational aspects of quantum computing (bits vs qubits).

Here, Google can offer multiple answers to the same question and, assuming it’s doing its job properly, these answers should all come from experts.

In theory, Google should be providing the best answer(s) to a technical question of this nature – and, in this case, the search giant achieves this. That being said, ChatGPT arguably does a better job of simplifying complex information here.

ChatGPT’s answer is impressively concise, informative and easy to understand.

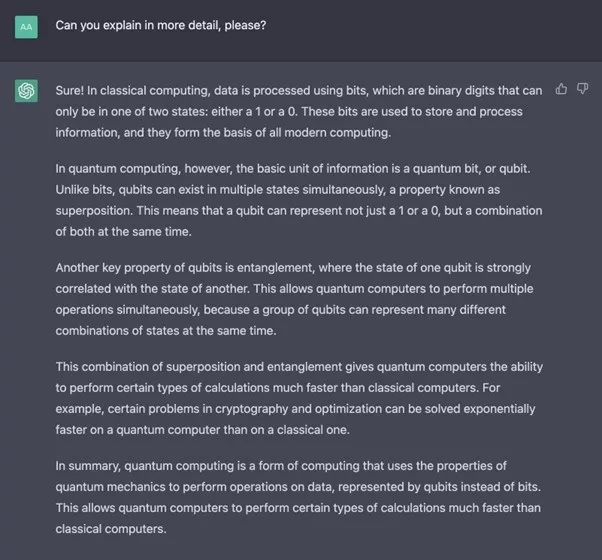

So what happens if we ask ChatGPT to explain quantum computing in more detail?

The response repeats many elements of its initial answer, but it does expand on its first explanation. This time, it references quantum mechanics and explains some key properties of qubits, but you can start to see where it’s pulling bits of information from different sources and merging them together.

Compare this with the first result from our Google search for “quantum computing simple explanation”: an Investopedia page offering a complete article with the following sections:

- What is quantum computing?

- Understanding quantum computing

- Uses and benefits of quantum computing

- Features of quantum computing

- Limitations of quantum computing

- Quantum computer vs classical computer

- Quantum computers in development (examples)

- FAQs

That’s 1,661 words of content written, reviewed and fact-checked by experts using information from credited sources – from the first result in Google Search.

ChatGPT does what it’s designed to do very well: provide human-like answers to human prompts. However, expecting it to replace something it isn’t designed to be (e.g. a search engine) is setting it up to fail.

What Are the Limitations of ChatGPT?

As soon as you open the test version of ChatGPT, the interface lists three of its biggest limitations:

- “May occasionally generate incorrect information”

- “May occasionally produce harmful instructions or biased content”

- “Limited knowledge of world and events after 2021”

Right away, this tells us that we can’t rely on ChatGPT to always generate accurate information. The big follow-up question is: can we rely on Google and other search engines to provide accurate information?

Once again, ChatGPT generates responses from vast amounts of information that already exists. Google, on the other hand, compiles the web pages it considers most relevant and accurate for the user’s query.

In this sense, Google essentially takes a third-party role and assumes less responsibility for the accuracy of the answers themselves.

More importantly, Google is constantly getting better at prioritising content written by experts and people with experience in the subject matter in question.

ChatGPT is basically rehashing information from its dataset and this raises a few key issues:

- It can put the wrong combination of info together and provide inaccurate information (as OpenAI acknowledges)

- It can be factually accurate but misplace emphasis on one aspect of a topic instead of something fundamentally more important

- It can regurgitate common misconceptions

- It struggles to add any depth of information

- It struggles with highly debated subjects

Let’s be clear: search engines and web content aren’t immune to any of the issues listed above – far from it. However, search engines are designed to point users to multiple sources of information, making it easy for them to select trustworthy sources and gain a deeper level of understanding.

ChatGPT is not designed to do this.

In terms of occasionally producing “harmful instructions or biased content,” this is a significant risk with all AI information systems, and it’s vital that users are informed of it – something OpenAI rightly does on ChatGPT’s primary interface.

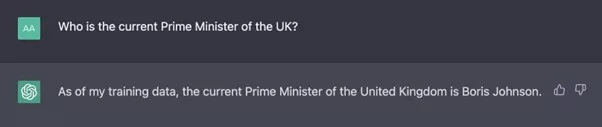

The final limitation OpenAI points out refers to ChatGPT’s training dataset, which only includes data running up to some point in 2021.

In practical terms, this means ChatGPT isn’t great for up-to-date general queries like “who is the current Prime Minister in the UK?”

Of course, we know Google is more than capable of dealing with queries like this.

Likewise, ChatGPT isn’t going to give you very good weather reports for queries like “weather Tokyo” or the latest information on rapidly developing topics like the Covid-19 pandemic.

Takeaway: ChatGPT is not a search engine, let alone a good one

Comparing ChatGPT to a search engine like Google is a flawed experiment from the very beginning. In this article, we’ve tried to look at the most comparable use cases for both technologies, but it’s still not a fair fight.

If we’re comparing the question answering (QA) functions of natural language processing (NLP), ChatGPT is setting the standard right now. However, it’s not applying these technologies to the same use cases as search engines like Google. ChatGPT is a question answering system – and an impressive one, too – but it’s not a search engine in any sense of the definition.

In terms of information delivery, we at Vertical Leap have compared the output of ChatGPT and Google Search, but we haven’t even touched on the other interactions search engines handle. Nobody is booking flights through ChatGPT, finding local shops that are open right now or comparing different products.

This doesn’t mean the technology currently powering ChatGPT won’t, someday, power whatever replaces search engines as we know them – but it won’t be ChatGPT itself.
